GOAT: GO to Any Thing

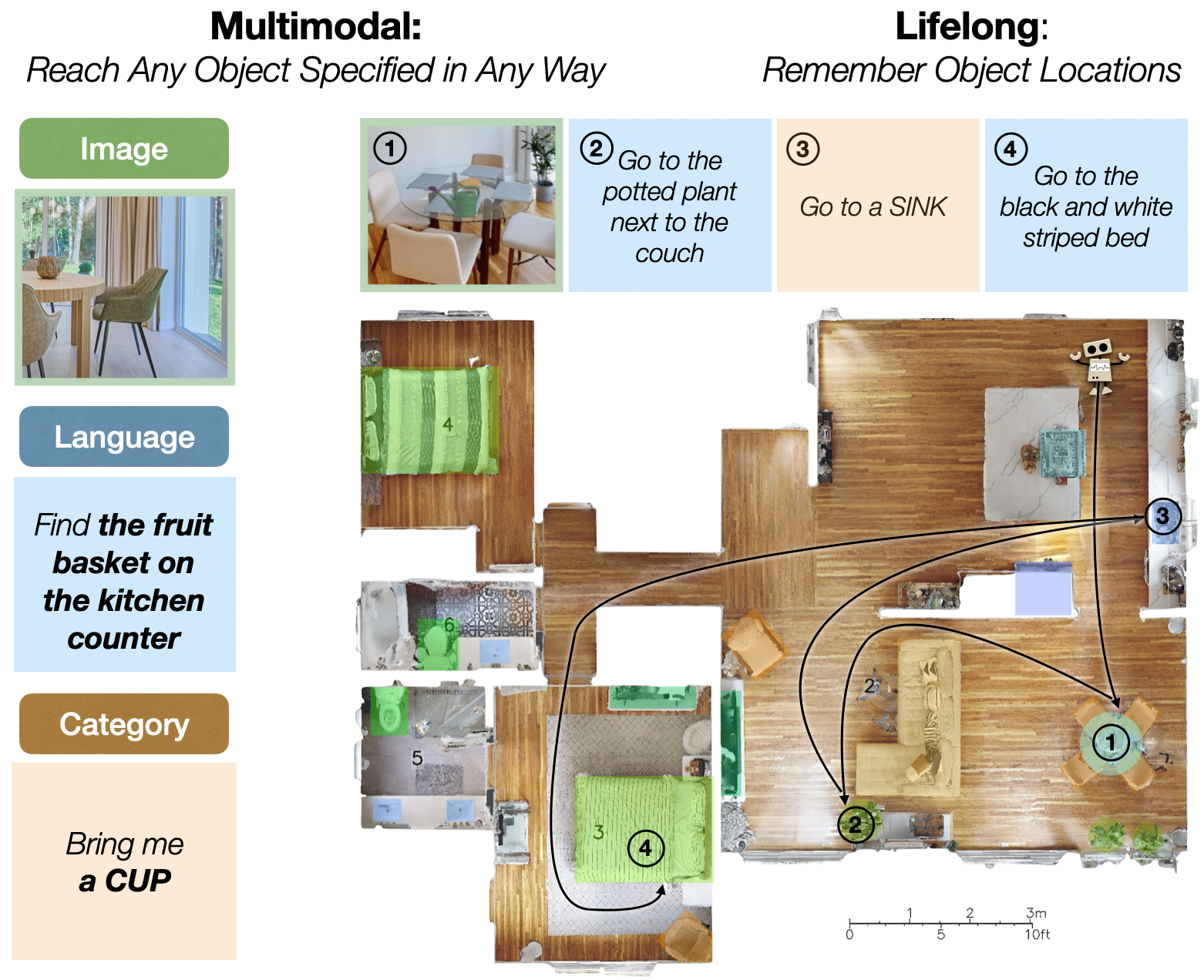

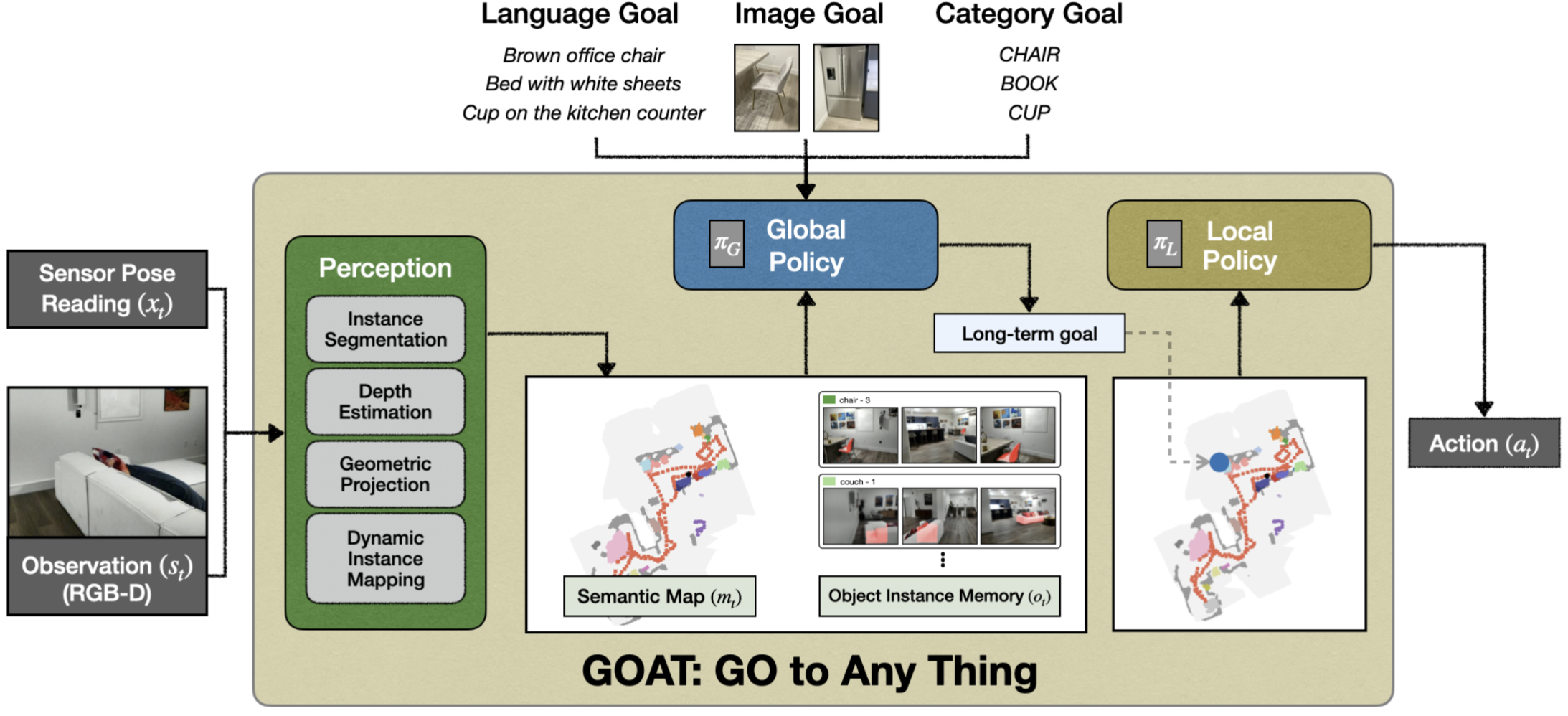

We present the Go To Anything agent: the first universal navigation system that can search for and navigate to any object however it is specified - as an image, language, or a category - in completely unseen environments, without requiring pre-computed maps or locations of objects.

GOAT Problem

Imagine dropping a robot in an unknown home and asking it to pick up a cup from the coffee table and bring it to the sink. What makes this challenging? The robot needs to explore the environment, detect and localize relevant objects, remember their locations, and navigate to, pick, and place objects. In any realistic deployment setting, a general navigation primitive should be multitask and lifelong.

GOAT System

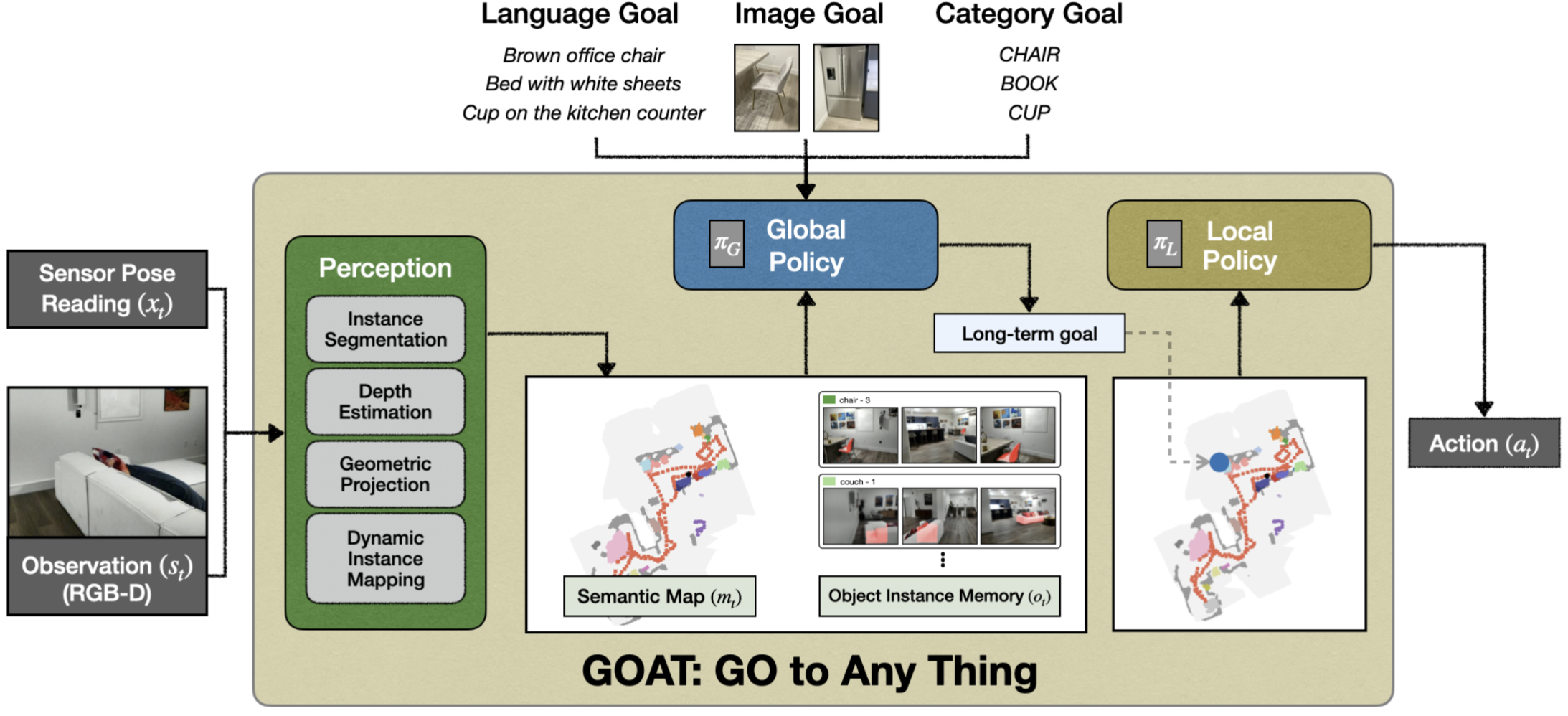

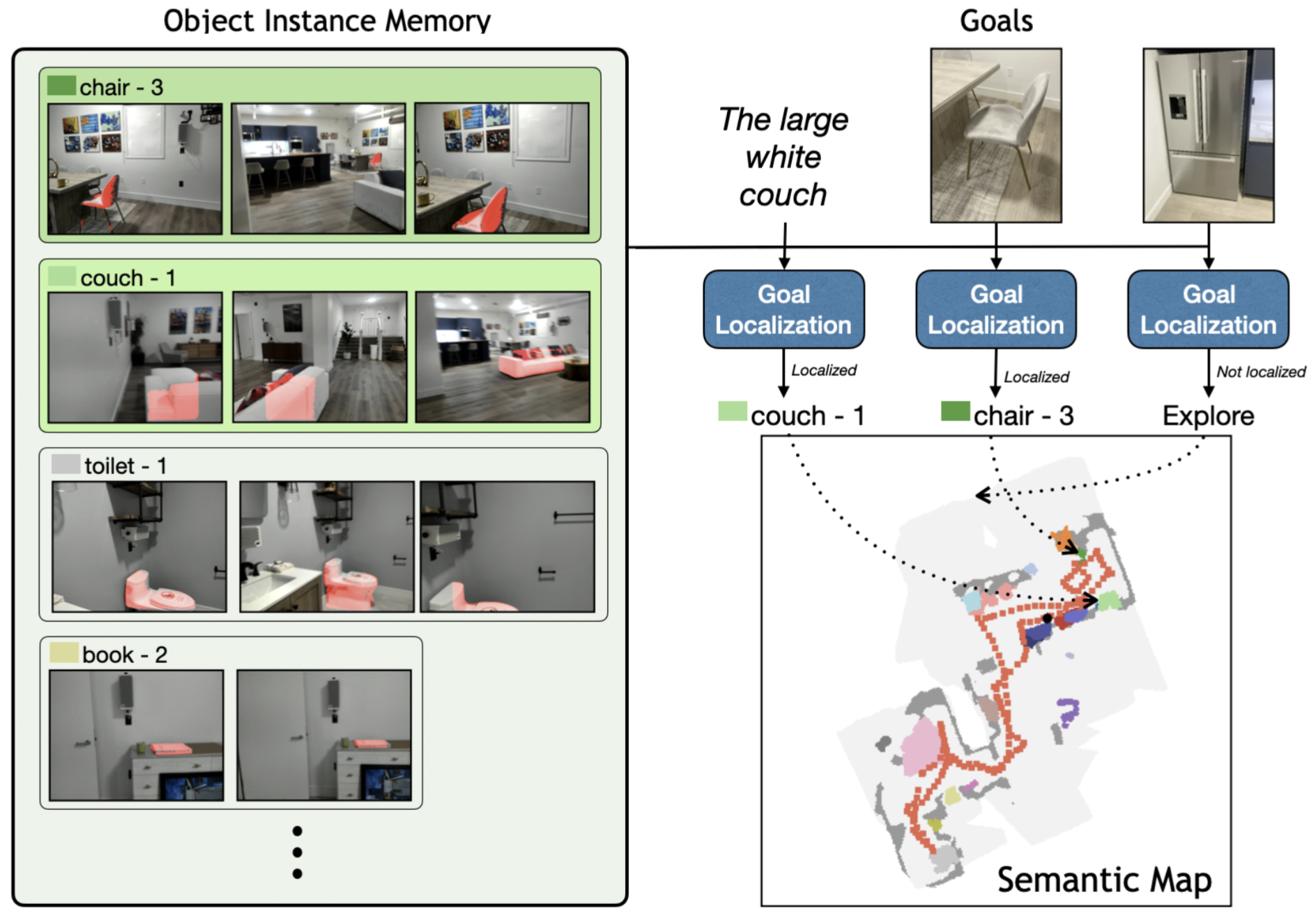

As the agent moves through the scene, the perception system processes RGB-D camera inputs to detect object instances and localize them into a top-down semantic map of the scene. In addition to the semantic map, GOAT maintains an Object Instance Memory that localizes individual instances of object categories in the map and stores images in which each instance has been viewed. This memory allows the agent to localize and navigate to any object instances that have been observed previously. A global policy takes this lifelong memory representation and a goal object specified as language, an image, or a category, and outputs a long-term goal in the map. Finally, a local policy plans a trajectory to achieve this long-term goal and outputs actions to be taken by the agent.


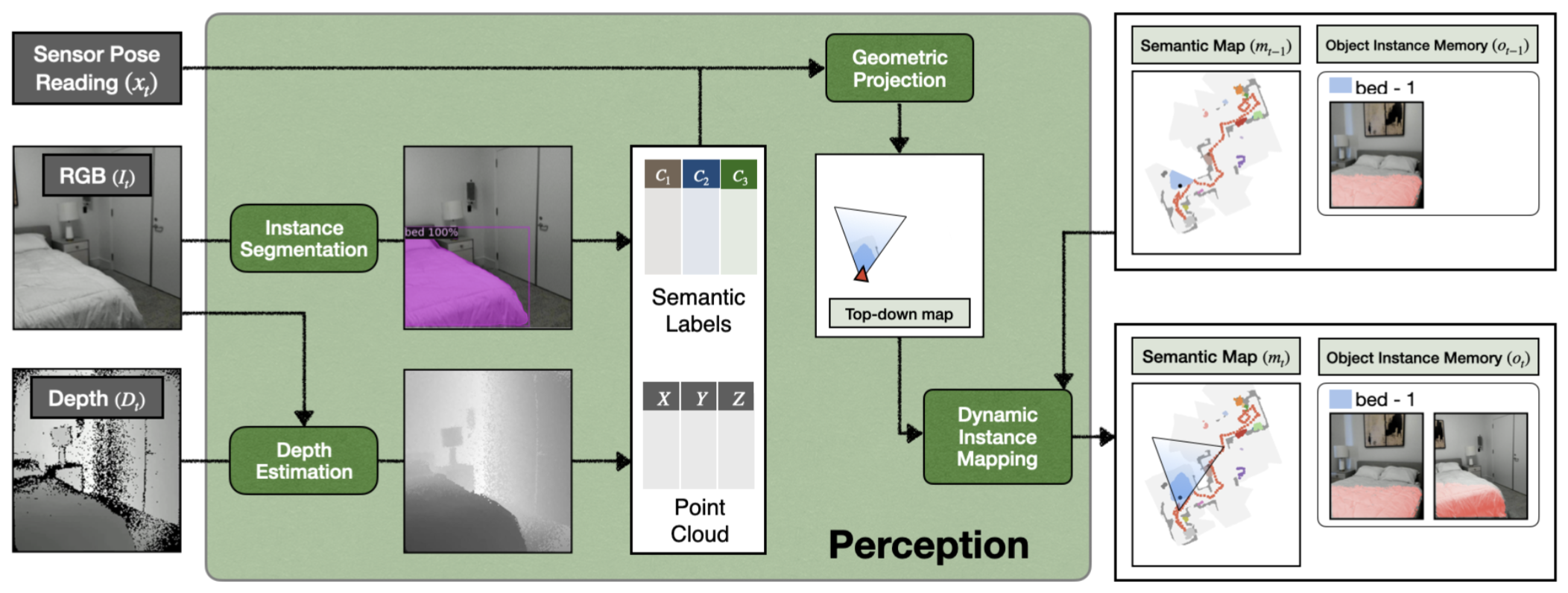

The perception system takes as input the current depth image, RGB image, and pose reading from onboard sensors. It uses off-the-shelf models to segment instances in the RGB image and estimate depth to fill in holes for reflective objects in raw sensor readings. Object detections are then projected and added to a top-down map of the scene. For each object detected, we also store the image in which the object was detected as part of the object instance memory.
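The projection of detections into the top-down map can be illustrated with a minimal sketch. It assumes a pinhole camera, a planar pose `(x, y, theta)`, and a per-pixel category mask from the segmentation model. The function name, the intrinsics `fx` and `cx`, and the grid parameters are hypothetical and not taken from the GOAT implementation. Height is ignored for brevity.

```python
import math


def project_to_map(depth, labels, pose, fx, cx, cell_size, map_size):
    """Project labelled depth pixels into a top-down grid (illustrative only).

    depth:  2D list of metric depths along the optical axis (0 = missing reading)
    labels: 2D list of category ids with the same shape (0 = background)
    pose:   (x, y, theta) of the camera in world coordinates, theta in radians
    Returns a dict mapping (row, col) map cells to the set of category ids seen there.
    """
    x0, y0, theta = pose
    cells = {}
    for v, depth_row in enumerate(depth):
        for u, z in enumerate(depth_row):
            category = labels[v][u]
            if not z or category == 0:
                continue  # skip holes in the depth image and background pixels
            # Pinhole back-projection: lateral offset to the right of the optical axis.
            x_cam = (u - cx) * z / fx
            # Rotate (forward = z, right = x_cam) into the world frame.
            world_x = x0 + z * math.cos(theta) + x_cam * math.sin(theta)
            world_y = y0 + z * math.sin(theta) - x_cam * math.cos(theta)
            row = int(world_y // cell_size)
            col = int(world_x // cell_size)
            if 0 <= row < map_size and 0 <= col < map_size:
                cells.setdefault((row, col), set()).add(category)
    return cells
```

A real system would also filter points by height and accumulate evidence over many frames; the sketch only shows the geometry of a single frame.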

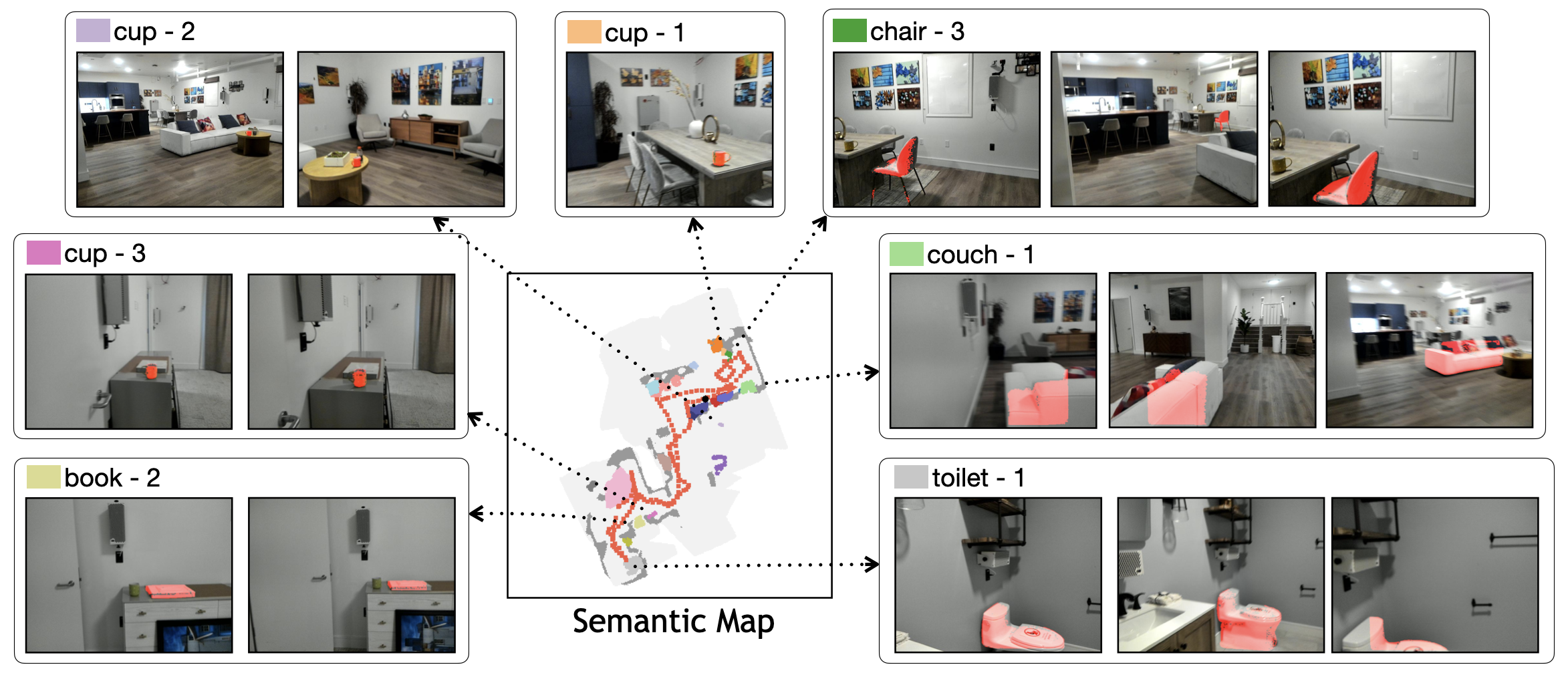

Our Object Instance Memory clusters object detections across time into instances using their location in the top-down map and their category. Each object instance is associated with the images in which it has been viewed. This allows new goals to be matched against all images of specific instances or categories. In addition, image features can be pooled within each instance to derive per-instance summary statistics.
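One way to picture this clustering is the sketch below. It is not the GOAT implementation: the class and field names, the greedy nearest-instance assignment, and the `merge_radius` threshold are assumptions made for illustration. A detection is merged into an existing instance of the same category if it lies close enough in the map; otherwise it starts a new instance.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Instance:
    category: str
    location: tuple  # running mean (x, y) in map coordinates
    image_ids: list = field(default_factory=list)


class ObjectInstanceMemory:
    """Hypothetical memory that groups detections by category and map location."""

    def __init__(self, merge_radius=0.5):
        self.merge_radius = merge_radius  # assumed threshold, in map units
        self.instances = []

    def add_detection(self, category, location, image_id):
        # Find the nearest existing instance of the same category within the radius.
        best, best_dist = None, self.merge_radius
        for inst in self.instances:
            if inst.category != category:
                continue
            d = math.dist(inst.location, location)
            if d <= best_dist:
                best, best_dist = inst, d
        if best is None:
            best = Instance(category, tuple(location))
            self.instances.append(best)
        else:
            # Update the running mean of the instance location.
            n = len(best.image_ids)
            best.location = tuple(
                (n * old + new) / (n + 1) for old, new in zip(best.location, location)
            )
        best.image_ids.append(image_id)
        return best

    def instances_of(self, category):
        return [inst for inst in self.instances if inst.category == category]
```

Keeping the image ids per instance is what lets a later image or language goal be compared against every view of a specific object rather than against the category as a whole.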

When a new goal is specified, the agent first checks whether the goal has already been observed in the Object Instance Memory. The method for computing matches is tailored to the modality of the goal specification. After an instance is selected, its stored location in the top-down map is used as a long-term point navigation goal. If no instance is localized, the global policy outputs an exploration goal. We use frontier-based exploration, which selects the closest unexplored region as the goal.
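The decision between navigating to a remembered instance and exploring can be sketched as follows. This is an illustration under stated assumptions, not the GOAT code: the grid encoding, the function names, and the choice to measure "closest" by breadth-first search over free cells are all hypothetical.

```python
from collections import deque

UNKNOWN, FREE, OBSTACLE = -1, 0, 1


def closest_frontier(grid, start):
    """Return the nearest free cell that borders unknown space, or None.

    Distance is counted in 4-connected steps through free cells from `start`.
    """
    rows, cols = len(grid), len(grid[0])

    def neighbors(r, c):
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols:
                yield nr, nc

    queue = deque([start])
    seen = {start}
    while queue:
        r, c = queue.popleft()
        # A frontier cell is a reachable free cell adjacent to unexplored space.
        if any(grid[nr][nc] == UNKNOWN for nr, nc in neighbors(r, c)):
            return (r, c)
        for nr, nc in neighbors(r, c):
            if grid[nr][nc] == FREE and (nr, nc) not in seen:
                seen.add((nr, nc))
                queue.append((nr, nc))
    return None  # nothing left to explore


def select_long_term_goal(matched_location, grid, agent_cell):
    """Use the remembered location if the goal was matched, else explore."""
    if matched_location is not None:
        return matched_location
    return closest_frontier(grid, agent_cell)
```

Here `matched_location` stands in for the output of the modality-specific matching step, which is not modeled in this sketch.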

The local policy simply uses the fast marching method to compute actions that move the agent closer to the long-term goal.
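To give a feel for this step, the sketch below uses a Dijkstra-style wavefront on a 4-connected grid as a discrete stand-in for fast marching, which propagates a front in the same order but solves a continuous distance equation. The function names and grid encoding are hypothetical. The agent then greedily steps to the neighbor with the smallest distance to the goal.

```python
import heapq
import math

STEPS = ((1, 0), (-1, 0), (0, 1), (0, -1))


def distance_field(grid, goal):
    """Distance-to-goal for every free cell (grid value 1 marks an obstacle)."""
    rows, cols = len(grid), len(grid[0])
    dist = [[math.inf] * cols for _ in range(rows)]
    dist[goal[0]][goal[1]] = 0.0
    heap = [(0.0, goal)]
    while heap:
        d, (r, c) = heapq.heappop(heap)
        if d > dist[r][c]:
            continue  # stale heap entry
        for dr, dc in STEPS:
            nr, nc = r + dr, c + dc
            if not (0 <= nr < rows and 0 <= nc < cols) or grid[nr][nc] == 1:
                continue
            if d + 1.0 < dist[nr][nc]:
                dist[nr][nc] = d + 1.0
                heapq.heappush(heap, (d + 1.0, (nr, nc)))
    return dist


def next_cell(dist, cell):
    """Move to the neighbor closest to the goal; stay put if none is closer."""
    best = cell
    for dr, dc in STEPS:
        nr, nc = cell[0] + dr, cell[1] + dc
        if 0 <= nr < len(dist) and 0 <= nc < len(dist[0]):
            if dist[nr][nc] < dist[best[0]][best[1]]:
                best = (nr, nc)
    return best
```

Because obstacle cells keep an infinite distance, the greedy step never enters them, and repeatedly calling `next_cell` follows a shortest path on the grid.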

Large-scale "In the Wild" Empirical Evaluation

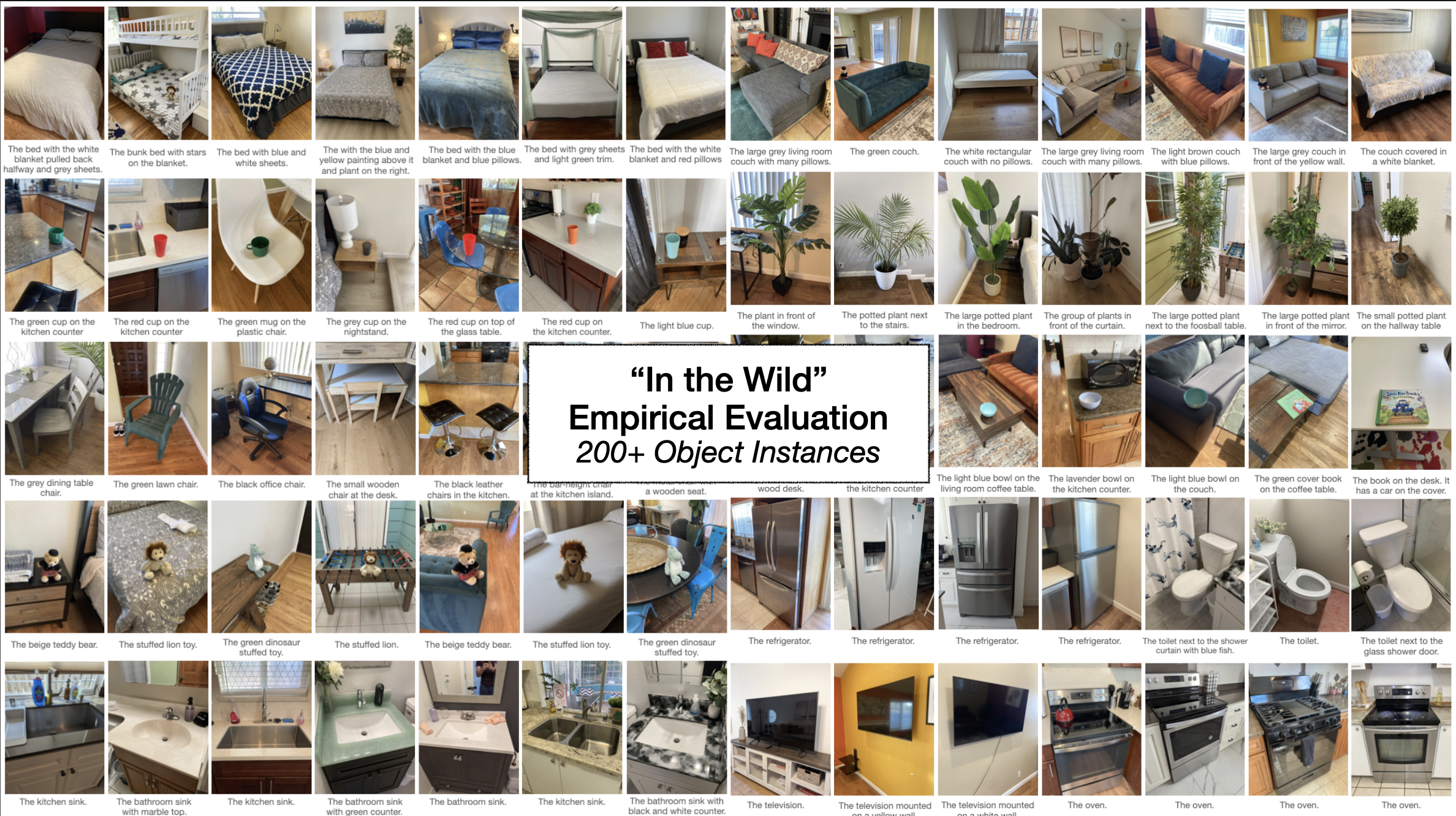

We evaluate GOAT “in the wild” in 9 unseen rented homes without pre-computed maps or locations of objects. We evaluate 4 methods - GOAT and 3 baselines - for 10 trajectories per home with 5-10 goals per trajectory, for a total of 90 hours of experiments.

In total, this represents over 200 different object instances given as image, language, or category goals.

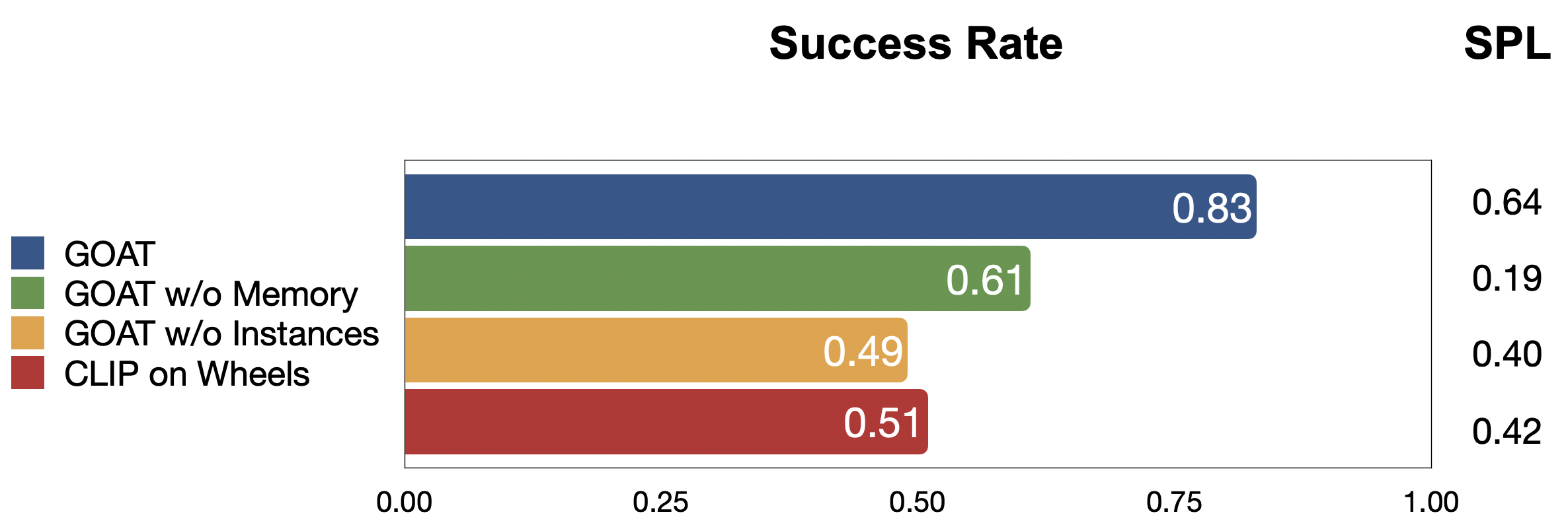

We compare approaches in terms of success rate per goal within a limited budget of 200 robot actions and Success weighted by Path Length (SPL), a measure of path efficiency. GOAT reaches an 83% success rate and 0.64 SPL. Performance drops when removing the persistent memory, as the agent needs to re-explore the environment with every goal, when removing the ability to distinguish object instances from the same category, or when using CLIP features of entire images to localize goals as suggested in prior work.
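For reference, SPL is commonly defined as the mean over goals of `S * l / max(p, l)`, where `S` is 1 on success and 0 otherwise, `l` is the shortest-path length to the goal, and `p` is the length of the path the agent actually took. The sketch below computes it for a list of episodes; the function name and the example numbers are illustrative and are not GOAT results.

```python
def spl(episodes):
    """Success weighted by Path Length.

    episodes: iterable of (success, shortest_path_length, agent_path_length).
    """
    episodes = list(episodes)
    if not episodes:
        raise ValueError("at least one episode is required")
    total = 0.0
    for success, shortest, taken in episodes:
        if not success:
            continue  # failed episodes contribute 0
        denom = max(taken, shortest)
        # A successful episode with zero-length paths counts as fully efficient.
        total += shortest / denom if denom > 0 else 1.0
    return total / len(episodes)
```

For example, `spl([(True, 4.0, 4.0), (True, 4.0, 8.0), (False, 4.0, 10.0), (True, 2.0, 8.0)])` gives 0.4375 with a 75% success rate: an agent can succeed often and still score a low SPL if its paths are much longer than the shortest ones.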

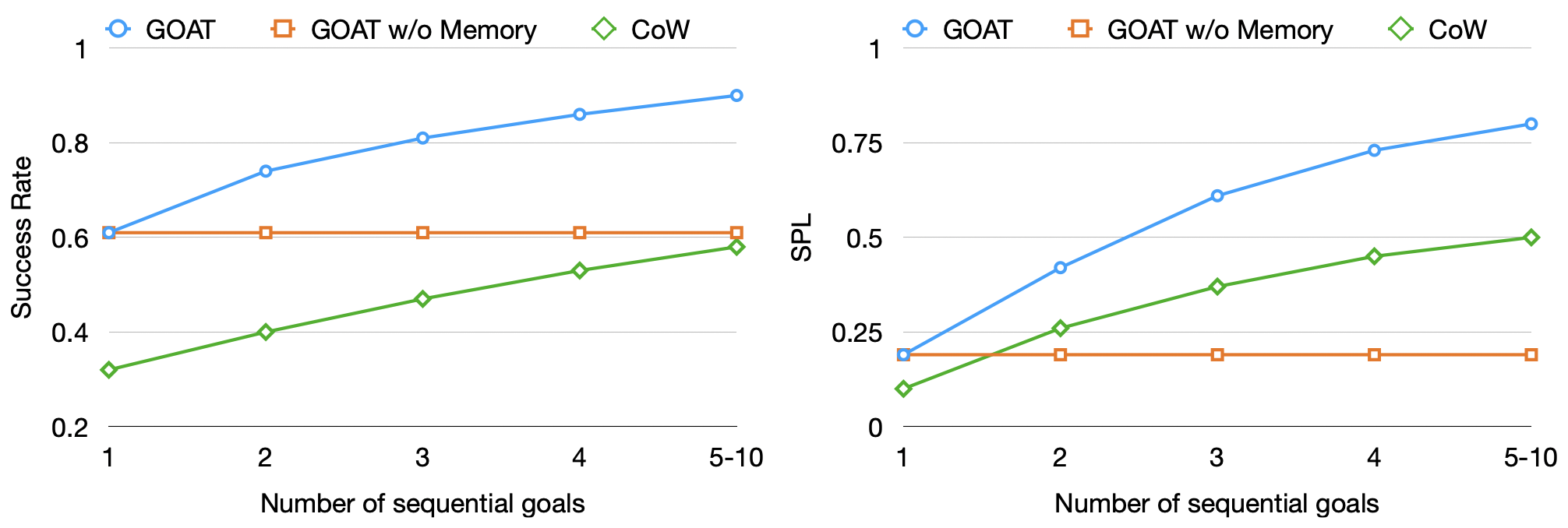

GOAT performance improves with experience in the environment: going from a 60% success rate (and 0.20 SPL) at the first goal to a 90% success rate (and 0.80 SPL) for goals 5-10 after thorough exploration. Conversely, GOAT without memory shows no improvement from experience, while COW (CLIP on Wheels, the baseline that localizes goals with CLIP features of entire images) benefits but plateaus at much lower performance.

Here you can see a full episode of the GOAT agent operating in an unknown real-world home.

Applications

As a general navigation primitive, the GOAT policy can be applied to downstream tasks like pick and place and social navigation. Here you can see it search for a bed, look for an image of a specific teddy bear, and bring it to the already localized bed.

Here you can see it plan around a person to reach the refrigerator, remove the previous location of the person from the map, and follow the person while updating their location.

Short Presentation